I’ve been working on the ECARF research project for the last few years, addressing some of the Semantic Web issues, in particular processing large RDF datasets using cloud computing. The project started using the Google Cloud Platform (GCP), namely Compute Engine, Cloud Storage and BigQuery, two years ago. Now that the initial phase of the project is complete, I thought I would reflect on the decision to use GCP. To summarise, the Google Compute Engine (GCE) per-minute billing saved us 697 hours, the equivalent of 29 days, a full month of VM time! Read on for details on how these figures were calculated and for my reflections on two years of GCP usage: starting 1,086 VMs programmatically and completing hundreds of jobs on a project of 24,538 lines of code. Note, I will occasionally abbreviate Google Cloud Platform as GCP and Google Compute Engine as GCE.

Per-Minute vs. Per-Hour Cloud Billing

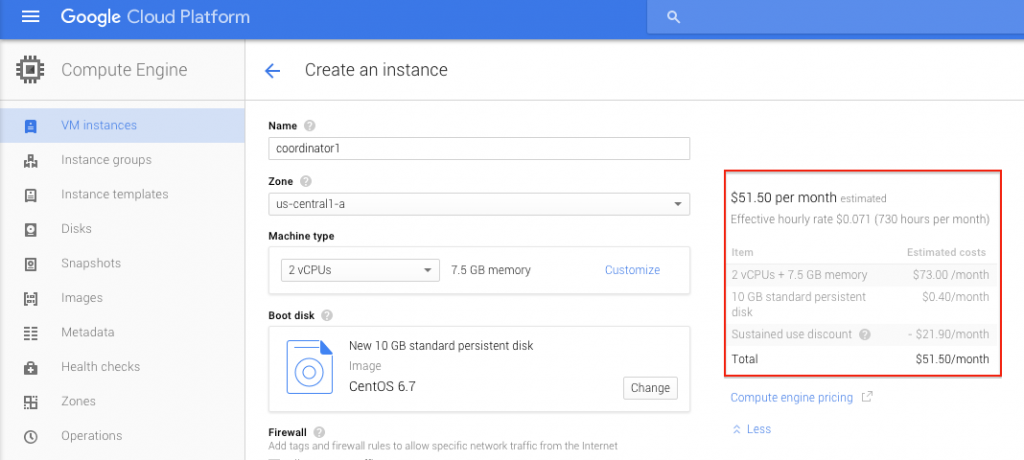

The workload that the ECARF project has processed on the cloud is short and irregular. For example, I could be starting 16 VMs with 4 cores each for 20 minutes, 1 VM with 4 cores for 15 minutes and another VM with 2 cores for 1 hour 10 minutes (a total of 70 cores) to process various jobs. Once the work is done, these VMs get deleted. Let’s do some math (ignoring the pricing tier for now). On per-minute billing I would pay for 16 x 20 + 1 x 15 + 1 x 70 = 405 minutes, that is 6 hours 45 minutes of usage. On per-hour billing, however, you pay a full hour for each of those 16 VMs (tough), that is 16 hours, plus 1 hour for the 15-minute VM and 2 hours for the 70-minute VM. The total in this case is 19 hours! That is 12 hours 15 minutes of waste!
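The arithmetic above can be reproduced with a short Python sketch. The function names and the `(count, minutes)` workload layout are illustrative only and are not part of the ECARF tooling; pricing tiers and minimum charges are ignored, as in the text.

```python
import math

# (number of VMs, minutes each VM ran), taken from the example above
workload = [(16, 20), (1, 15), (1, 70)]


def per_minute_total(jobs):
    """Total billable minutes when every VM is billed by the minute."""
    return sum(count * minutes for count, minutes in jobs)


def per_hour_total(jobs):
    """Total billable hours when every VM run is rounded up to whole hours."""
    return sum(count * math.ceil(minutes / 60) for count, minutes in jobs)


minutes_billed = per_minute_total(workload)          # 405 minutes = 6 h 45 min
hours_billed = per_hour_total(workload)              # 19 hours
wasted_minutes = hours_billed * 60 - minutes_billed  # 735 minutes = 12 h 15 min
```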

So the first thing that came to mind was to evaluate how much the project has saved through the Google Compute Engine (GCE) per-minute billing compared to using a cloud provider that charges per hour. The figures are astonishing! In the last year and a half we have started 1,086 virtual machines, the majority for less than an hour. The total billable hours with GCE’s per-minute billing are 1,118. If we were using a cloud provider that charges per hour, the total billable hours would be 1,815. This means per-minute billing saved us 697 hours, the equivalent of 29 days, a full month of VM time! Now this is a one-developer project. Imagine an enterprise with hundreds of projects and developers: the wasted cost with per-hour billing could well amount to thousands of dollars.

Appreciating the Saved Hours

I have been interested in cloud computing since 2008 and started using Amazon Web Services back in 2009. So when the prototype development phase started on the ECARF project two years ago, I didn’t have any experience with Google Compute Engine (GCE); my only experience was with Google App Engine. However, after watching a few announcements of new GCP features at Google IO and Cloud Platform Next, I thought there were too many cool features to ignore. One in particular was the ability to stream 100,000 records into Google BigQuery. So I decided to try GCP for the prototype development. I spent a couple of hours reading the GCE documentation and playing with code samples, and in no time I had created and destroyed my first few VMs using the API. Things were looking good and promising, so I never looked back.

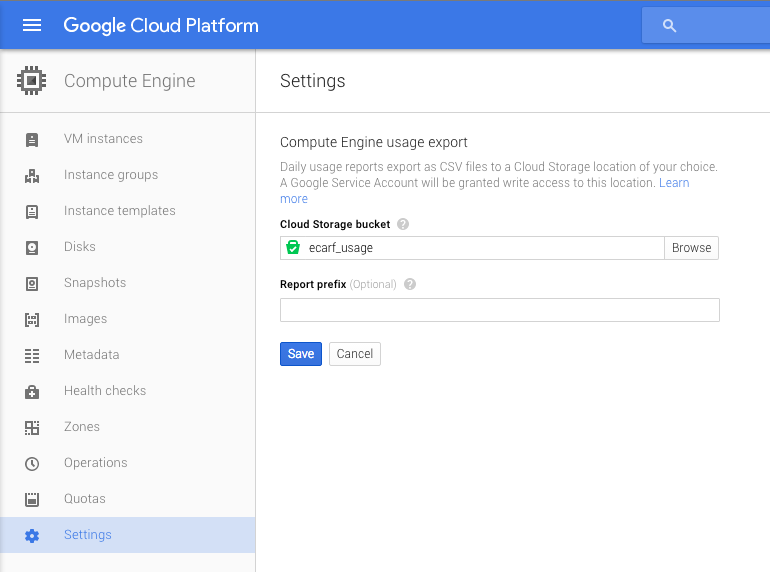

Luckily, I’ve had the Compute Engine usage export enabled from day one, so all the project’s Compute Engine usage CSV files since the start are available in a Cloud Storage bucket. These include daily usage files and an aggregated monthly usage file. When analysing them, we use either the daily files or the monthly ones, never both, otherwise everything gets counted twice.

To see how many hours we have saved with GCE per-minute billing, I’ve written a small utility to parse these CSV files and aggregate the usage. Additionally, for VM usage, the tool rounds up the GCE per-minute usage to per-hour usage. By the way, the tool is available on GitHub if you would like to appreciate your savings as well. So how does it work? Each time a VM starts, the usage files will contain a few entries like these:

| Report Date | MeasurementId | Quantity | Unit | Resource URI | ResourceId | Location |
|---|---|---|---|---|---|---|
| 10/03/2016 | com.google.cloud/services/compute-engine/NetworkInternetIngressNaNa | 3,610 | bytes | https://www.googleapis.com… /cloudex-processor-1457597066564 | .. | us-central1-a |
| 10/03/2016 | com.google.cloud/services/compute-engine/VmimageN1Highmem_4 | 1,800 | seconds | https://www.googleapis.com… /cloudex-processor-1457597066564 | ….. | us-central1-a |

The entry we are interested in is the one whose measurement ID contains VmimageN1Highmem_4, with its quantity given in seconds. In the second entry above, an n1-highmem-4 VM was started and ran for 30 minutes (1,800 seconds). With a cloud provider that charges per hour, that entry is immediately rounded up to an hour (3,600 seconds). To generate the per-hour billing values, the tool rounds anything below 3,600 seconds up to a full hour. If an entry is longer than 3,600 seconds, say 4,600 seconds, we check whether the remainder of 4,600 divided by 3,600 is larger than zero. If it is, we divide the entry by 3,600, round up the result and multiply it by 3,600, i.e. 4,600 / 3,600 ≈ 1.28, rounded up to 2, so the total billable under per-hour charging is 2 x 3,600 = 7,200 seconds. This needs to be done for each entry individually.
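The rounding rule described above can be sketched in a few lines of Python. This is a minimal illustration under my own naming (`round_up_to_hours` is hypothetical), not the code of the tool itself; it uses integer ceiling division, which covers both the "below one hour" and the "remainder larger than zero" cases in one step.

```python
SECONDS_PER_HOUR = 3600


def round_up_to_hours(seconds):
    """Seconds billed under per-hour billing for a single usage entry."""
    if seconds <= 0:
        return 0
    # Ceiling division: any started hour counts as a full hour.
    hours = -(-seconds // SECONDS_PER_HOUR)
    return hours * SECONDS_PER_HOUR


half_hour_entry = round_up_to_hours(1800)  # 3,600 seconds, one full hour
longer_entry = round_up_to_hours(4600)     # 7,200 seconds, two full hours
```

An entry of exactly 3,600 seconds stays at one hour, since its remainder is zero.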

Here are the generated results after exporting them into Google Sheets and converting the seconds to hours:

| VM Type | Usage (hours) | Usage per-hour billing (hours) |
|---|---|---|
| VmimageCustomCore | 11.47 | 12 |
| VmimageF1Micro | 27.05 | 32 |
| VmimageG1Small | 87.50 | 104 |
| VmimageN1Highcpu_16 | 3.42 | 5 |
| VmimageN1Highcpu_2 | 1.57 | 2 |
| VmimageN1Highcpu_4 | 1.47 | 2 |
| VmimageN1Highcpu_8 | 2.52 | 4 |
| VmimageN1Highmem_2 | 35.47 | 137 |
| VmimageN1Highmem_4 | 262.53 | 446 |
| VmimageN1Highmem_8 | 1.02 | 3 |
| VmimageN1Standard_1 | 49.00 | 65 |
| VmimageN1Standard_16 | 0.58 | 3 |
| VmimageN1Standard_2 | 625.43 | 977 |
| VmimageN1Standard_4 | 4.33 | 12 |
| VmimageN1Standard_8 | 4.65 | 11 |
| Total | 1118 | 1815 |

Notice the n1-standard-16 VM (VmimageN1Standard_16), which we have started three times: the total usage amounts to just over half an hour on GCE, while the equivalent is 3 hours on per-hour billing! In this case we started the n1-standard-16 for 600, 720 and 780 seconds respectively. In per-hour billing that is an hour each.
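To make the per-entry rounding concrete, here is a hedged Python sketch that aggregates usage per VM type. The sample rows are made up for illustration and carry only a subset of the columns from the usage table (the exact header names of the real export are an assumption), and `aggregate_vm_usage` is a hypothetical name, not a function of the actual tool.

```python
import csv
import io
from collections import defaultdict

SECONDS_PER_HOUR = 3600

# Made-up sample rows; the three VM entries mirror the n1-standard-16 example.
SAMPLE_CSV = """MeasurementId,Quantity,Unit
com.google.cloud/services/compute-engine/VmimageN1Standard_16,600,seconds
com.google.cloud/services/compute-engine/VmimageN1Standard_16,720,seconds
com.google.cloud/services/compute-engine/VmimageN1Standard_16,780,seconds
com.google.cloud/services/compute-engine/NetworkInternetIngressNaNa,3610,bytes
"""


def aggregate_vm_usage(csv_text):
    """Sum actual and per-hour-rounded seconds for each VM measurement."""
    totals = defaultdict(lambda: [0, 0])  # VM type -> [actual, rounded]
    for row in csv.DictReader(io.StringIO(csv_text)):
        vm_type = row["MeasurementId"].rsplit("/", 1)[-1]
        if not vm_type.startswith("Vmimage") or row["Unit"] != "seconds":
            continue  # skip network, disk and other non-VM entries
        seconds = int(row["Quantity"].replace(",", ""))
        # Round each entry up on its own, before adding it to the total.
        rounded = -(-seconds // SECONDS_PER_HOUR) * SECONDS_PER_HOUR
        totals[vm_type][0] += seconds
        totals[vm_type][1] += rounded
    return {vm: tuple(values) for vm, values in totals.items()}


usage = aggregate_vm_usage(SAMPLE_CSV)
# usage["VmimageN1Standard_16"] is (2100, 10800): about 0.58 hours vs. 3 hours
```

Rounding the summed 2,100 seconds instead would give a single hour, which is why each entry has to be rounded individually.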

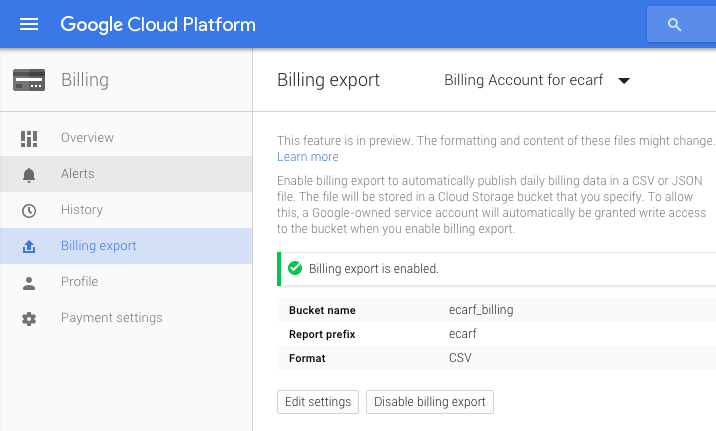

On a separate note, Google Cloud Platform billing export is currently in preview. I’ve had a brief look at the exported CSV files and they are the best of both worlds. They contain the detailed usage included in the usage export, but with cost values, and not just for GCE but for all of the Google Cloud Platform.

The Modern Cloud

If you ask me to describe the Google Cloud Platform (GCP) in one word, I will use the word ‘Modern’, because GCP is a modern cloud that challenges the status quo of cloud computing. If you ask me for a different word, you will get ‘Cool’. My argument for why I believe GCP is a modern cloud can be summarised in the following points, each of which I will explain:

- Highly Elastic Cloud

- Flexible Billing Models

- Clear And Well Structured Infrastructure as a Service (IaaS) offerings

- Robust and Efficient Authentication Mechanisms

- Unified APIs and Developer Libraries

Back in 2008 cloud computing was a revolution compared to dedicated physical server hosting. Back then, if you hired a dedicated server you had to commit to a full month of billing; then cloud computing came with the revolution of hourly billing. However, cloud computing in 2016 shouldn’t be like cloud computing in 2008. Hardware got cheaper (following Moore’s law) and hypervisors improved considerably. Now the revolution should be the move from hourly to sub-hourly billing.

Highly Elastic Cloud

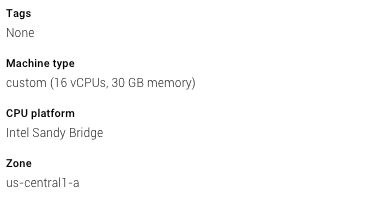

There is currently a great deal of research into improving hypervisors and cloud elasticity, especially vertical elasticity. I won’t be surprised if in the near future we are able to dynamically scale the memory or the CPU cores of a VM up or down whilst it’s running. A modern cloud should be more elastic than one that treats VMs like physical dedicated servers. I should be able to define my own CPU and RAM requirements rather than having to choose from a predefined list (within reason, obviously). I should be able to freely change the metadata of my VM at any time, not just at startup. These are modern features that the ECARF project couldn’t do without, and both are offered by GCE. Such features allow us to build cool modern systems that, for example, combine elasticity with machine learning techniques to offer dynamic computing.

Flexible Billing Models

The profile of the ECARF project’s workload isn’t unique; many other areas have a similar workload profile. Continuous integration environments that acquire VMs to build code and run tests might not run for a full hour. Another area is prototyping or research and development of cloud-based systems, which might start and terminate VMs on a regular basis.

GCE ticks the box by offering modern and flexible billing models for both irregular and regular, short-term and long-term workload profiles through per-minute billing, sustained usage discounts and Preemptible VMs. And the 10-minute minimum charge is only fair, because creating a VM involves many steps, such as scheduling and reserving capacity on a physical host, and those come with a cost.
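As a rough illustration of how per-minute billing with a 10-minute minimum plays out, here is a small Python sketch. It assumes that usage beyond the minimum is rounded up to the next whole minute; the function name is hypothetical, and sustained usage discounts and Preemptible pricing are left out.

```python
MINIMUM_MINUTES = 10  # minimum charge per VM run, as described above


def billable_minutes(seconds_running):
    """Billable minutes for one VM run under per-minute billing."""
    # Assumption: a started minute is billed as a full minute.
    minutes = -(-seconds_running // 60)
    return max(MINIMUM_MINUTES, minutes)


short_run = billable_minutes(90)     # 10, the minimum charge applies
half_hour = billable_minutes(1800)   # 30
odd_run = billable_minutes(601)      # 11, rounded up to the next minute
```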

Not only this, but GCP goes further: if you feel that any of the predefined machine types (CPU / RAM combinations) is more than what you need, you can launch your own custom VMs that best fit your workload, achieving savings at multiple levels.

Clear And Structured IaaS Offerings

Over the past couple of years, I’ve never struggled to grasp or understand any of the services offered by GCE. The predefined machine types are also very clear: shared core, standard, high memory and high CPU. I know them all by heart now, with their memory configurations and, to some extent, their pricing. I clearly understand the difference between the IOPS for a standard disk and an SSD disk and how the chosen size impacts their performance. The information is well structured and there is no information overload; disk pricing and other advanced information, such as processor architecture, is kept separate. So at first glance the pricing page is simple and easy to understand, and if you are interested in a deep dive you can follow the links to more advanced content.

Robust and Efficient Authentication Mechanisms

OAuth2 makes it really easy for developers to utilise GCP APIs. I’ve been through the authentication keys and certificates hell: which ones are valid, which ones have expired, oh wait, someone committed them by mistake to GitHub! My experience with OAuth2 is that it’s much cleaner. You authorise the resources to act on your behalf, no one keeps any keys or passwords, the resource can then acquire an OAuth token when needed, and you can revoke that access at any time. Now with Cloud Identity and Access Management, there is a robust authentication and access management framework in place that works well both for the enterprise and for developers.

One use case that made our lives easier on the ECARF project is that we treat VMs as ephemeral: they get started to do some work and then get terminated. I don’t want to worry about copying keys or certificates over to a VM so it can access the rest of the cloud provider’s APIs. Shouldn’t it be enough for me to delegate my authority to the VM at startup, and never have to worry about startup scripts that copy security keys or certificates, and then about a mechanism (environment variables or similar) to tell my application their whereabouts? I could package these with my application, but the application is either checked out from GitHub (public repo) or packaged in the VM image, in which case key and certificate management becomes hell. Through service accounts we are able to specify which access the VM gets, and once the VM is started it contacts the metadata server to obtain an OAuth token which it can use to access the allowed APIs.
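A minimal Python sketch of that last step, using only the standard library: the URL and the `Metadata-Flavor` header are the documented Compute Engine metadata server conventions, while the function names are my own and this is not the ECARF code. `fetch_access_token` only works when run on a GCE VM that was started with a service account.

```python
import json
import urllib.request

# Metadata server endpoint for the default service account's OAuth token
TOKEN_URL = ("http://metadata.google.internal/computeMetadata/v1/"
             "instance/service-accounts/default/token")


def build_token_request():
    """Build the metadata request; the Metadata-Flavor header is required."""
    return urllib.request.Request(TOKEN_URL, headers={"Metadata-Flavor": "Google"})


def parse_token_response(body):
    """Extract the access token and its lifetime (seconds) from the JSON body."""
    payload = json.loads(body)
    return payload["access_token"], payload["expires_in"]


def fetch_access_token(timeout=5):
    """Ask the metadata server for a token; only reachable from inside a VM."""
    with urllib.request.urlopen(build_token_request(), timeout=timeout) as response:
        return parse_token_response(response.read().decode("utf-8"))
```

The returned token is then sent as an `Authorization: Bearer` header on calls to the APIs the service account is allowed to use, so no key file ever has to be copied onto the VM.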

Unified APIs and Developer Libraries

Google offers unified client libraries for all their APIs, with a mechanism to discover and explore these APIs, making it easier for developers to discover, play with and utilise them. Their usage patterns are the same: if you have used one, you can easily write code for another. Together with OAuth2 support, this gives developers a way to try and invoke these APIs in the browser using the API Explorer without writing a single line of code, so I can plan and design my app accordingly. If there are any caveats, I’m able to discover them early, rather than after writing the code.

Additionally, Google APIs use JSON rather than XML. A few others have assessed the cost of transporting XML vs. JSON, so there is no need to repeat it here. When dealing with cloud APIs and sending or retrieving large amounts of data, every second spent serializing, compressing and transporting that data counts! In this case JSON is less verbose and achieves a better compression ratio with less CPU usage. Forget JSON, and welcome the General RPC framework, the basis for all of Google’s future APIs, utilising HTTP/2 and Protocol Buffers. Now this is a step into the future.

Summary

To wrap up, we have saved a month of VM time by using Google Compute Engine with its per-minute billing. In this article we have only looked at the saved hours, but haven’t investigated the actual cost savings compared to per-hour billing, which I will leave for a future article. Additionally, by using the modern cloud features offered by the Google Cloud Platform we were able to save time and focus on what we needed to build and achieve.

All opinions and views expressed here are mine and are based on my own experience. Follow @omerio