Introduction

Contents

- 1 Introduction

- 2 Example Multi-tier Web App

- 3 Optional interlude: Container Patterns

- 4 Creating the Kubernetes Cluster

- 5 The kubectl Command

- 6 KubeUI – The Kubernetes Web UI

- 7 The Cluster Configuration Files

- 8 Deploying the Pods

- 9 Testing the Replication Controller

- 10 Deploying the Backend Service

- 11 Deploying the Frontend Service

- 12 Verifying Services Load Balancing

- 13 Clean Up

- 14 Conclusion

This post provides a hands-on introduction to using Kubernetes on Google Container Engine. It is a follow-up to my previous post on understanding the key concepts of Kubernetes.

We will deploy a multi-tiered (frontend and backend) web application cluster on Google Container Engine. Although we are going to deploy a specific web app, the concepts explained here are generic and can be applied to other apps. We will cover the following topics:

- A quick introduction to the web app we are deploying.

- How to create a Kubernetes cluster on Google Container Engine.

- Deploying containers and Pods using Replication Controllers.

- Testing the functionality of Replication Controllers.

- Deploying Services to facilitate load balancing.

- Testing the functionality of Services.

All the steps covered in this post are also in this YouTube video, if you prefer watching instead.

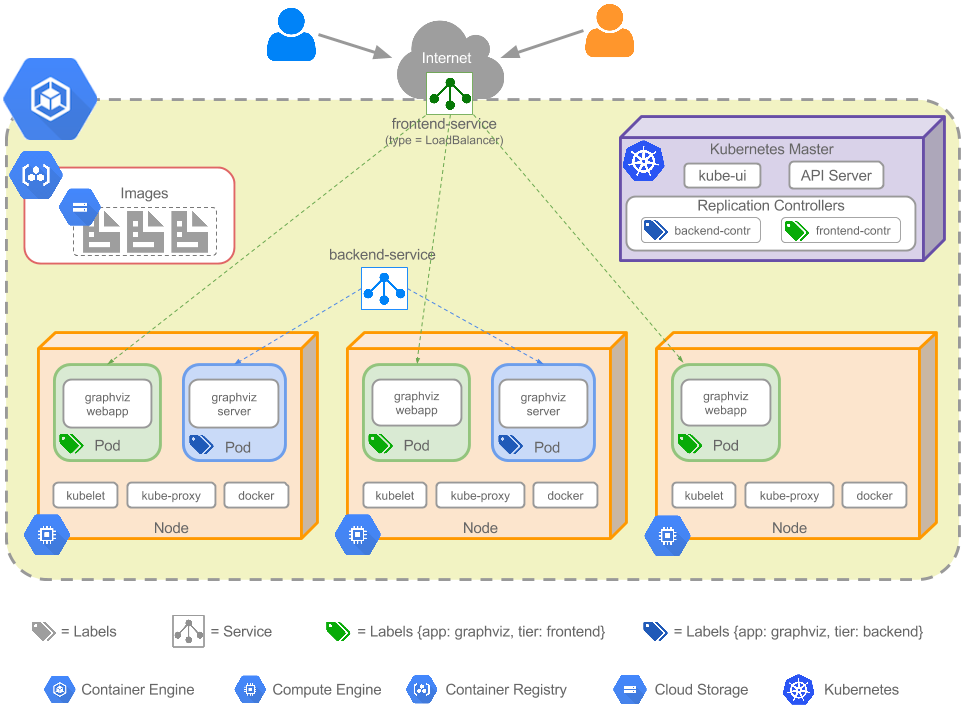

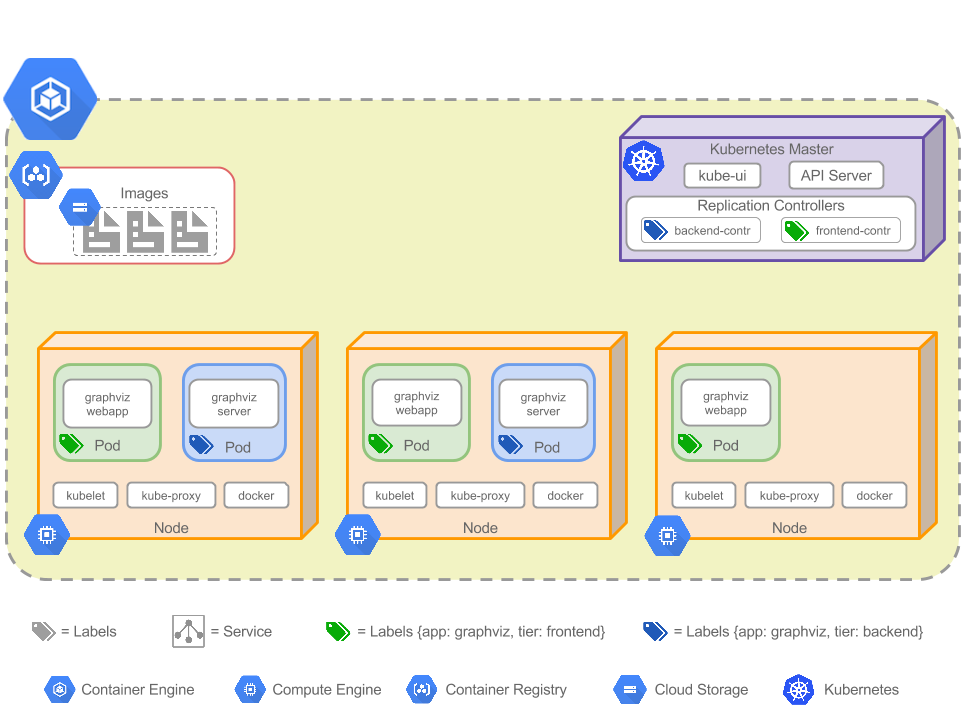

The end game is to have a cluster like this running on Google Container Engine. Don’t worry about the details for now; we will build up the cluster gradually in this post until we reach this final state.

Example Multi-tier Web App

We will go through deploying the following web application cluster:

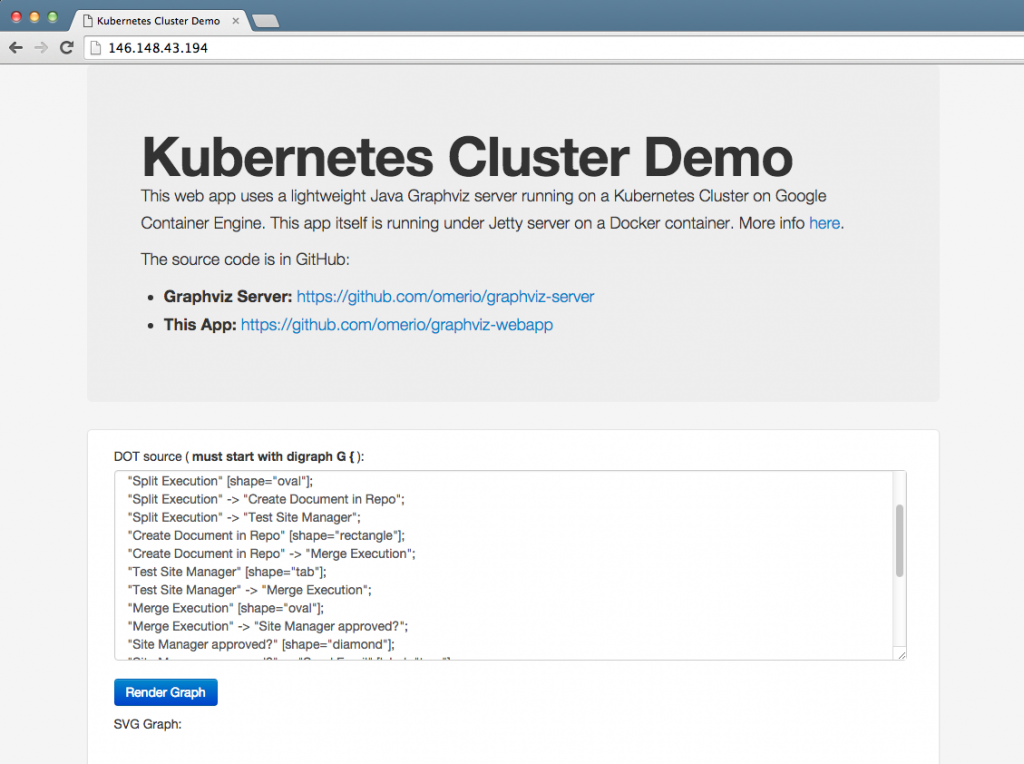

The cluster has three frontend containers running the Jetty server with a simple web application. The cluster also has two backend containers that run a simple HTTP server and have the Graphviz application installed. Graphviz is a powerful open source graph layout and visualisation tool. The graphs are specified in DOT language, which is a plain text graph description language.
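If you have not seen DOT before, here is a minimal sketch of how DOT text for a small directed graph could be generated. The `to_dot` helper and the node names are my own illustration and are not part of the web app; the helper does not escape double quotes inside node names.

```python
def to_dot(edges, name="G"):
    """Return DOT text for a directed graph given (source, target) pairs."""
    lines = [f"digraph {name} {{"]
    for source, target in edges:
        # Quote node names so that names with spaces or dashes stay valid DOT.
        lines.append(f'    "{source}" -> "{target}";')
    lines.append("}")
    return "\n".join(lines)


# Hypothetical three-node graph, purely for illustration.
dot_text = to_dot([("frontend", "backend"), ("backend", "graphviz")])
print(dot_text)
```

The printed text is the kind of input a user would paste into the frontend before rendering.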

You can try out a non-containerized version of the web app here. The user pastes their DOT text into the frontend and clicks the ‘Render Graph’ button. This fires an AJAX call to the Jetty server, which sends a request to one of the backend graphviz-servers to render the graph. The resulting SVG graph is then displayed to the user.

I have published the Docker images for both the frontend and backend applications to the public Docker central registry and also to my private Google Container Registry. The source code, Dockerfiles, and public images for both apps are summarised in this table:

| Frontend (graphviz-webapp) | Backend (graphviz-server) |
| --- | --- |
| Source code | Source code |
| Dockerfile | Dockerfile |
| Docker image | Docker image |

Optional interlude: Container Patterns

You can skip this section on a first read, but I think it’s worth mentioning. As people started to use containers more often to solve particular problems, a need emerged for reusable patterns that address common container challenges. Think of the “Gang of Four” design patterns for software development. You might also be asking yourself whether our frontend container image uses the best approach. After all, the Jetty server and the WAR (Web Archive) file are both lumped together in the same image. This means that each time we have a new WAR file we have to build a new image. It would be nice if we could separate the WAR deployment from the Jetty container so that the container is reusable.

Enter container patterns. In this case we could use a “sidecar” pattern to address this problem. The Kubernetes examples include a similar case to ours, with a sidecar container for deploying the WAR file. There is also a great post on the Kubernetes blog explaining some of these container patterns.

Creating the Kubernetes Cluster

Ok, let’s get started. First we head over to the Google Cloud Console and create a new project. Then, from the Gallery menu on the left-hand side, we visit the Container Engine and Compute Engine services, which automatically enables their APIs. Next we click on the Cloud Shell icon at the top right to activate it. For an introduction to Cloud Shell see my previous post.

First we set the Compute Engine zone. We list all the zones using the following Google Cloud SDK command:

gcloud compute zones list

Then we choose the us-central1-a zone:

gcloud config set compute/zone us-central1-a

Next, we create a Kubernetes cluster:

gcloud container clusters create graphviz-app

By default, if we do not specify the number of nodes and their type, Container Engine will use three n1-standard-1 (1 vCPU, 3.75 GB memory) Compute Engine VMs for the cluster.

To explicitly specify the number of nodes and their type, we would use the following command:

gcloud container clusters create graphviz-app --num-nodes 1 --machine-type g1-small

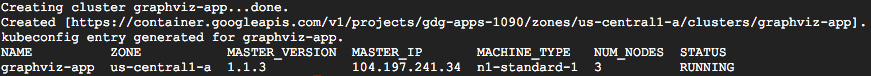

Once the command finishes executing, we should see output similar to this:

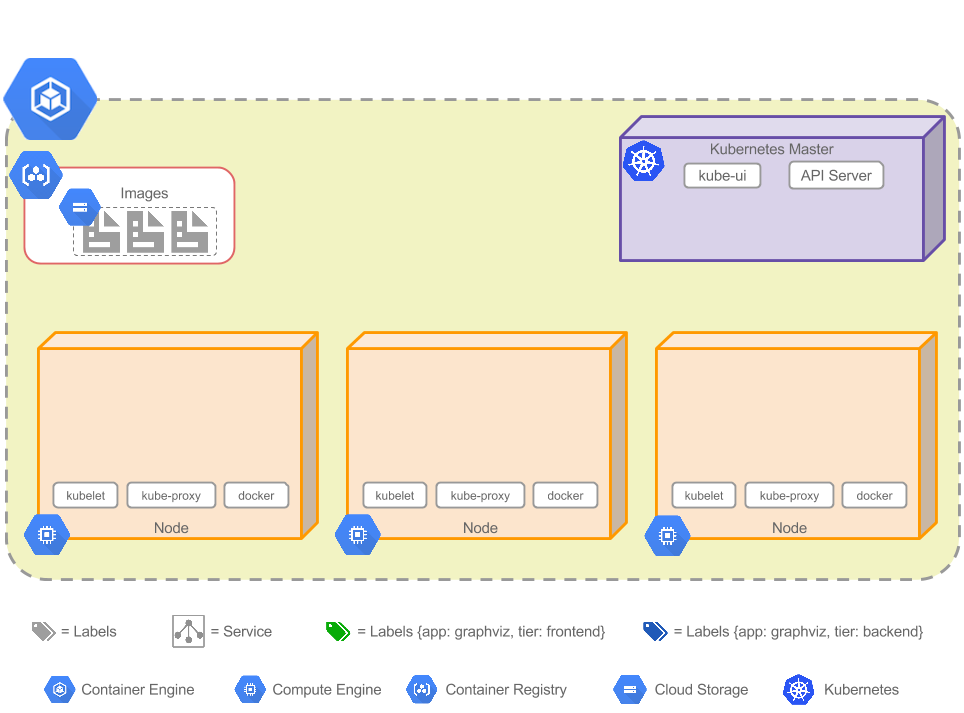

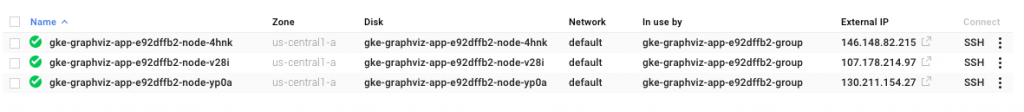

At this point Container Engine has created a Compute Engine cluster with the Kubernetes platform installed. The cluster will look something like this:

The cluster nodes are simply Compute Engine VMs so we can SSH to them and see them in the cloud console.

The Kubernetes Master, however, is managed by Container Engine and we cannot SSH to it. We can still connect to a Web UI on the master, as we will explain shortly. If you were deploying a Kubernetes cluster yourself, you would need to manage both the master and the nodes. When using Container Engine, the master is managed for us automatically.

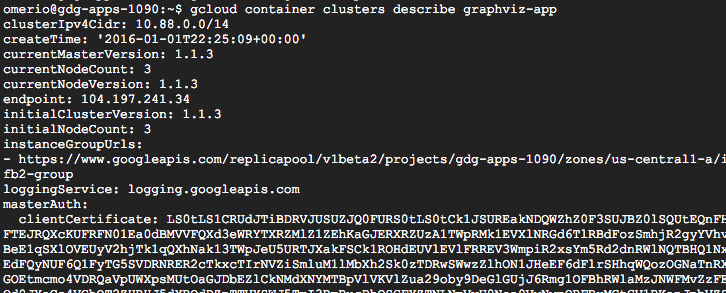

Now let’s describe the newly created cluster:

gcloud container clusters describe graphviz-app

The output will look similar to this:

Toward the end of the output there is a username and password. We will use these shortly to connect to the Web UI running on the Kubernetes master.

The kubectl Command

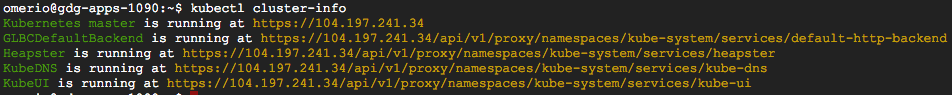

So far we have been interacting with the cluster using the Google Cloud SDK (gcloud). Since the cluster is fully set up with the Kubernetes platform, we can start interacting with it using the kubectl command. Let’s get the Kubernetes cluster information by running the following command:

kubectl cluster-info

The output should look something like this:

As you can see, the details contain the Kubernetes Master URL and URLs for various Kubernetes services, one of which is KubeUI, the Web UI running on the Kubernetes Master. Before we have a look at the UI, you might be asking yourself how the kubectl command is able to find our current cluster. The kubectl command expects a .kube directory in the user’s home folder containing a config file. The Google Cloud SDK has already created this file for us at .kube/config with all the details kubectl needs to interact with the cluster.
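As a rough illustration of that lookup, here is a small sketch that works out which config file(s) would be consulted. It goes slightly beyond this post by also honouring the KUBECONFIG environment variable, which kubectl supports as an override; the `kubeconfig_paths` helper is my own name, not part of any tool.

```python
import os
from pathlib import Path


def kubeconfig_paths(env=None):
    """Return the config file paths kubectl would consult, in order."""
    env = os.environ if env is None else env
    override = env.get("KUBECONFIG", "")
    if override:
        # KUBECONFIG may list several files separated by the OS path separator.
        return [Path(p) for p in override.split(os.pathsep) if p]
    # Default location described above: ~/.kube/config
    return [Path.home() / ".kube" / "config"]


for path in kubeconfig_paths():
    print(path, "(exists)" if path.exists() else "(missing)")
```

Running this in Cloud Shell after creating the cluster should report the file as existing, since the Google Cloud SDK has written it for us.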

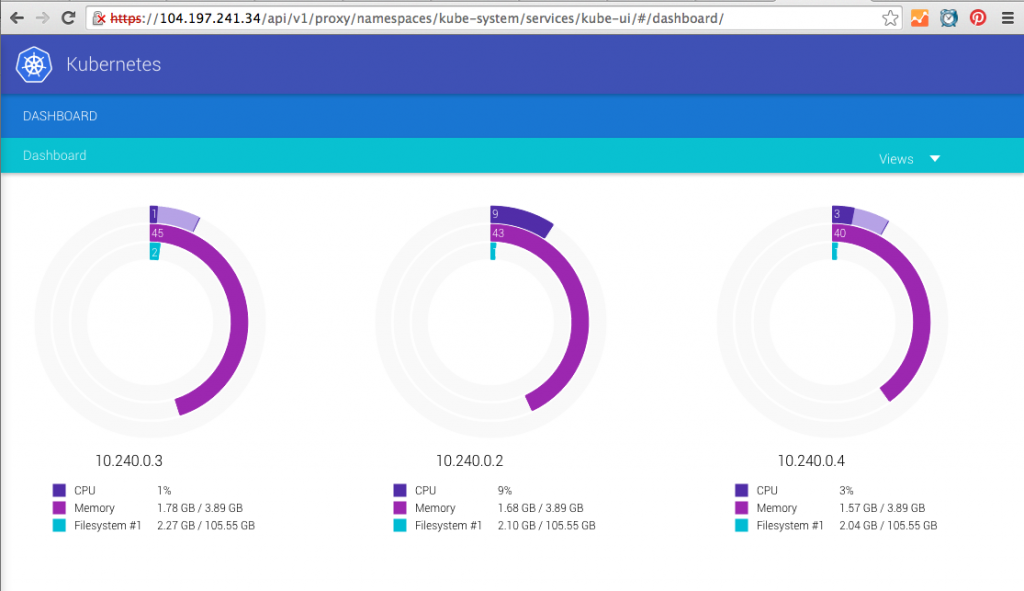

KubeUI – The Kubernetes Web UI

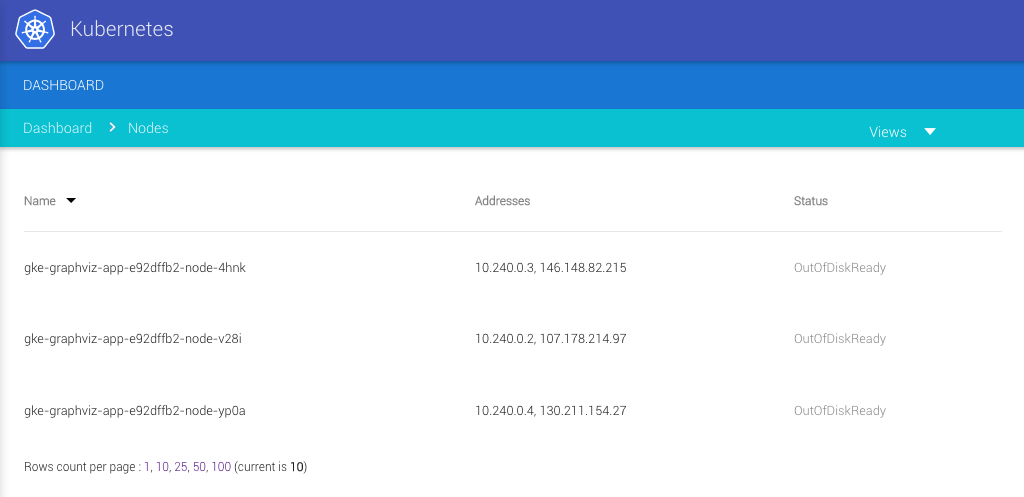

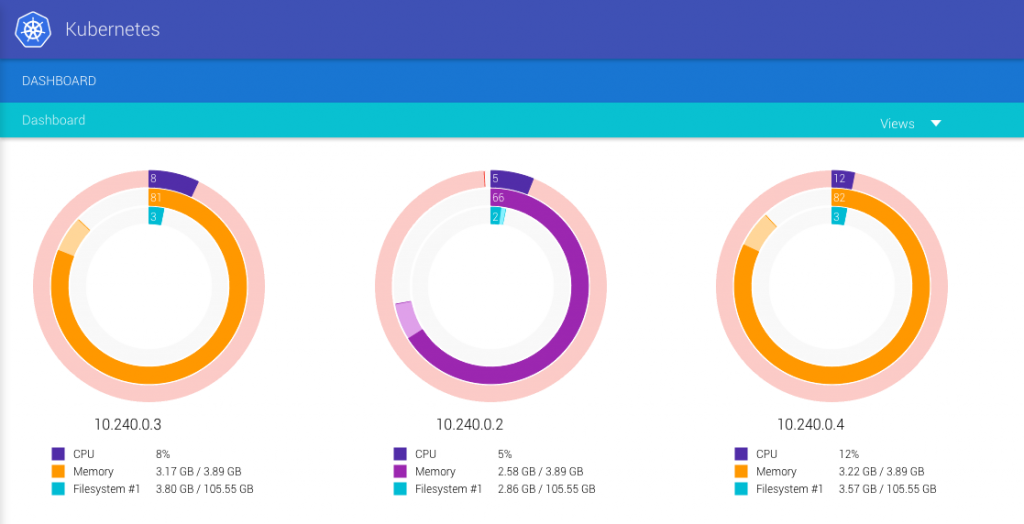

Let’s navigate to the KubeUI URL displayed in the output of ‘kubectl cluster-info’. We will need the username and password mentioned previously, and we also need to ignore the certificate warning. The UI shows a graphical dashboard of the three nodes in the cluster.

The Cluster Configuration Files

The configuration files for our cluster are on GitHub and consist of the following four YAML files (Kubernetes configurations can also be written in JSON):

- backend-controller.yaml – Replication Controller “backend-contr” for the backend Pods, this will deploy two Pods.

- frontend-controller.yaml – Replication Controller “frontend-contr” for the frontend Pods, this will deploy three Pods.

- backend-service.yaml – Service “backend-service” to load balance the backend Pods.

- frontend-service.yaml – Service “frontend-service”, an external load balancer for the frontend Pods that allows Web traffic.

The files contain many comments explaining their various parts, so we won’t go through their contents in this post.
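For readers who want a feel for the shape of such a file without opening the repository, here is a minimal sketch of a Replication Controller definition, built as a Python dictionary and printed as JSON. Only the controller name and the replica count come from this post; the label, image name and container port are placeholders and will differ from the real backend-controller.yaml.

```python
import json

# Hypothetical values: the label, image and port below are placeholders,
# not the ones used in the repository's backend-controller.yaml.
backend_controller = {
    "apiVersion": "v1",
    "kind": "ReplicationController",
    "metadata": {"name": "backend-contr"},
    "spec": {
        "replicas": 2,  # the post deploys two backend Pods
        "selector": {"app": "backend"},
        "template": {
            # Pods created from this template carry the label the selector matches.
            "metadata": {"labels": {"app": "backend"}},
            "spec": {
                "containers": [
                    {
                        "name": "graphviz-server",
                        "image": "example/graphviz-server",
                        "ports": [{"containerPort": 8080}],
                    }
                ]
            },
        },
    },
}

manifest_json = json.dumps(backend_controller, indent=2)
print(manifest_json)
```

The key point is that the selector and the labels in the Pod template must match, since that is how the controller recognises the Pods it owns.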

Deploying the Pods

First let’s check out the Kubernetes configuration files for the cluster from GitHub:

git clone https://github.com/omerio/kubernetes-graphviz.git

cd kubernetes-graphviz

The Replication Controller for the backend Pods is defined in backend-controller.yaml.

We deploy the Replication Controller and backend Pods by running the following command:

kubectl create -f backend-controller.yaml

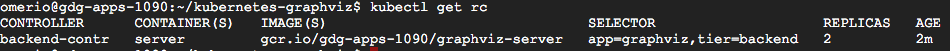

And verify that this Replication Controller is created:

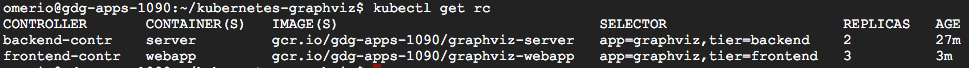

kubectl get rc

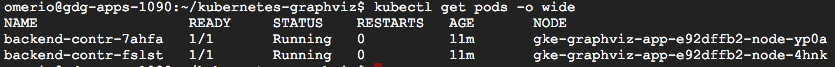

Let us also verify that the two backend Pods are created. We can list all the Pods in the cluster using the following command:

kubectl get pods -o wide

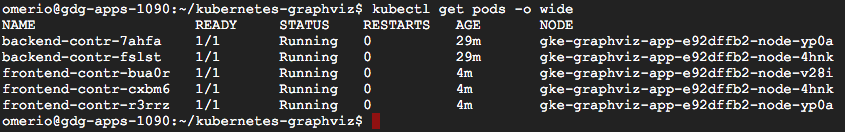

The output shows the two Pods and the hostnames of the nodes they are deployed to:

We can SSH to one of the two nodes (10.240.0.3 or 10.240.0.4) to confirm that the backend Pods are created by running the following command using the hostname of one of the nodes:

gcloud compute ssh gke-graphviz-app-e92dffb2-node-4hnk

Once we login to the node, we can view all the running Docker containers by running the following command:

sudo docker ps

The output shows the recently deployed backend Pod and its container:

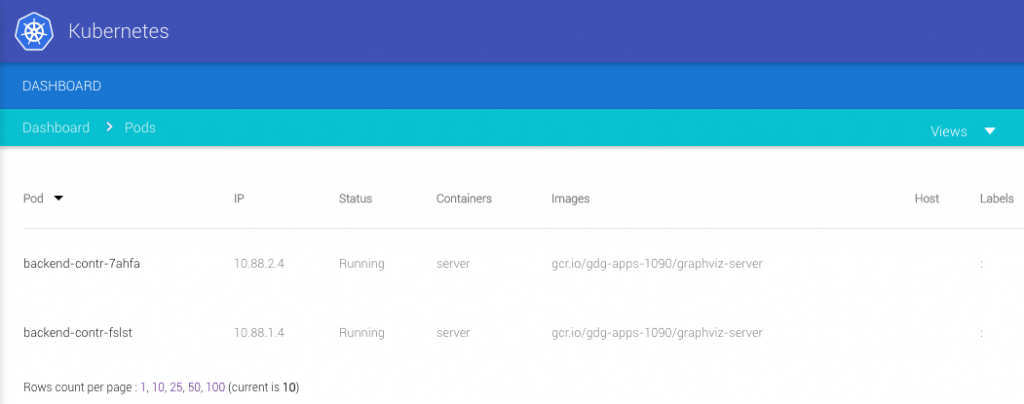

Looking at the KubeUI dashboard, it seems the two Pods were created on nodes 1 and 3, as is evident from the memory usage on these two nodes and their recent CPU utilisation.

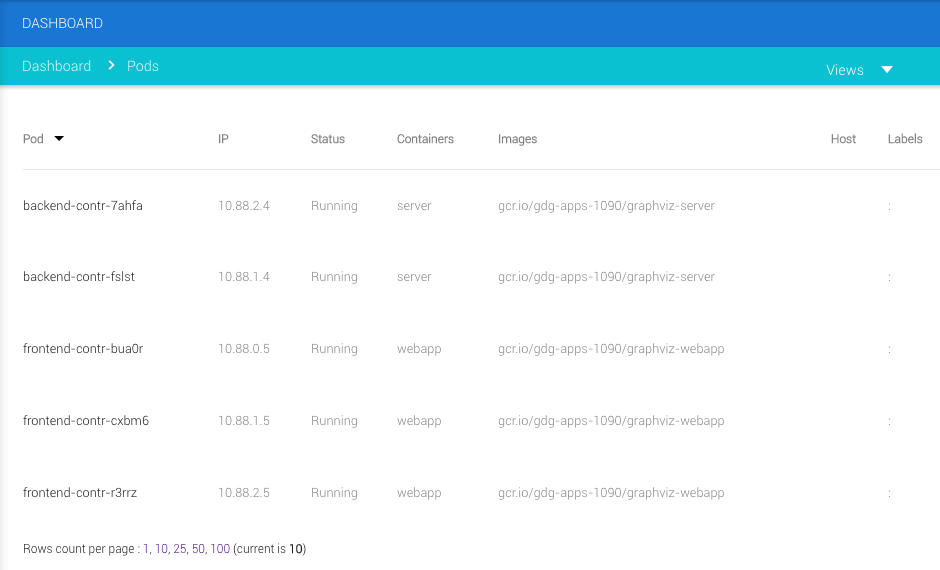

The Pods view also shows these two Pods and the nodes running them.

We now deploy the frontend Replication Controller and Pods:

kubectl create -f frontend-controller.yaml

Again the Dashboard nicely shows some increased CPU & memory utilisation on the nodes:

We can also verify, from the UI and by running the kubectl commands, that three extra frontend Pods are created:

kubectl get rc

kubectl get pods -o wide

After deploying the frontend and backend Replication Controllers, we have a total of five Pods and the cluster should look something like this:

Testing the Replication Controller

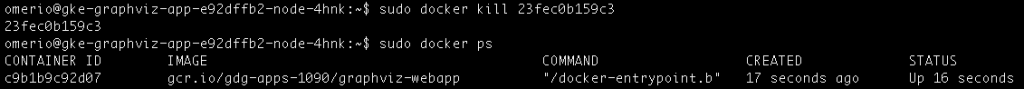

In this section we are going to test the functionality of the Replication Controller by explicitly killing one of the frontend Docker containers. We SSH into one of the three nodes:

gcloud compute ssh gke-graphviz-app-e92dffb2-node-4hnk

We then get the ID of the frontend container deployed on the node:

sudo docker ps

Then we kill it:

sudo docker kill 23fec0b159c3

Now we check the status of the current containers:

sudo docker ps

As you can see, after a short while the container we’ve killed has been replaced with a new one. Now let’s check the status of all Pods:

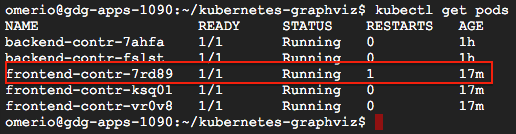

kubectl get pods

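We can see that the Pod has been restarted once (its restart count is now 1). Strictly speaking, it is the kubelet on the node that restarts a killed container inside an existing Pod, while the Replication Controller replaces whole Pods that are deleted or lost. We can also scale up Pods with the Replication Controller. For example, to create two additional frontend Pods we simply run: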

kubectl scale rc frontend-contr --replicas=5
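To make the idea behind a Replication Controller more concrete, here is a toy simulation of its control loop: compare the desired replica count with the Pods that actually exist, then create or remove Pods to close the gap. This is only a sketch of the concept under my own simplifications; the class name and the Pod naming scheme are made up, and real Kubernetes does far more.

```python
import itertools


class ReplicationControllerSim:
    """Toy model of a controller that keeps a fixed number of Pods running."""

    def __init__(self, name, replicas):
        self.name = name
        self.replicas = replicas
        self.pods = []
        self._ids = itertools.count(1)

    def reconcile(self):
        """Create or delete Pods until the actual count matches the desired one."""
        while len(self.pods) < self.replicas:
            self.pods.append(f"{self.name}-{next(self._ids)}")
        # Drop the newest Pods first when there are too many (an arbitrary choice).
        del self.pods[self.replicas:]
        return list(self.pods)


rc = ReplicationControllerSim("frontend-contr", replicas=3)
rc.reconcile()                        # three Pods are created
rc.pods.remove("frontend-contr-2")    # simulate losing a Pod
after_loss = rc.reconcile()           # a replacement Pod is created
rc.replicas = 5                       # similar in spirit to scaling with --replicas=5
after_scale = rc.reconcile()
print(after_loss)
print(after_scale)
```

The controller never repairs a particular Pod; it only cares that the count matches, which is why the replacement gets a new name.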

Deploying the Backend Service

Now let’s deploy the backend service to facilitate DNS name resolution and load balancing between the two backend Pods. In our frontend container, the web app running on the Jetty server simply uses the URL http://backend-service/svg to refer to the backend server. We expect the hostname backend-service to resolve to a stable IP address that the frontend can use.

First let’s deploy the Service ‘backend-service’:

kubectl create -f backend-service.yaml

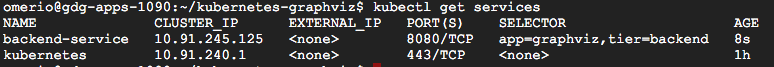

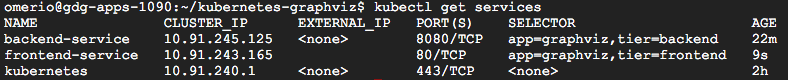

Then verify it’s created by running this command:

kubectl get services

Let’s log in to one of the frontend containers to double check that they can actually resolve the DNS name ‘backend-service’. We can SSH to any of the nodes, since all three nodes are running a replica of the frontend Pod.

gcloud compute ssh gke-graphviz-app-e92dffb2-node-v28i

We then run the following docker command and note the container id:

sudo docker ps

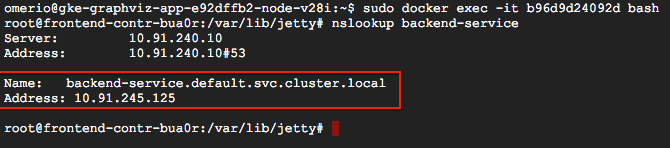

Then we start a shell on this frontend container and perform a DNS lookup for ‘backend-service’:

sudo docker exec -it b96d9d24092d bash

nslookup backend-service

(Note: I needed to run ‘apt-get install dnsutils’ since nslookup is not installed on the frontend containers)

As you can see, the hostname ‘backend-service’ has resolved to the IP address 10.91.245.125, which the frontend containers can use. This is one of the key capabilities provided by Kubernetes Services.
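Conceptually, a Service gives clients one stable name and address while spreading requests over whichever Pods currently back it. The following toy model illustrates that idea only. The cluster IP is the one quoted above, but the Pod IPs are made up, and the round-robin choice is an assumption for illustration rather than a statement about how Kubernetes picks a backend.

```python
import itertools


class ServiceSim:
    """Toy model of a Service: one stable address in front of changing Pods."""

    def __init__(self, name, cluster_ip, pod_ips):
        self.name = name
        self.cluster_ip = cluster_ip
        self._backends = itertools.cycle(pod_ips)

    def route(self):
        """Pick the backend Pod that receives the next request (round-robin)."""
        return next(self._backends)


# Hypothetical Pod IPs; only the cluster IP comes from the lookup in this post.
service = ServiceSim("backend-service", "10.91.245.125", ["10.88.0.4", "10.88.1.5"])
dns = {service.name: service.cluster_ip}  # what a DNS lookup of the name returns

targets = [service.route() for _ in range(4)]
print(dns["backend-service"], targets)
```

The frontend only ever sees the name and the single IP address; which Pod answers each request is hidden behind the Service.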

Deploying the Frontend Service

The frontend service will create an external load balancer to allow Web traffic to the web apps in the frontend Pods. This load balancer is external to Kubernetes and is created as a Compute Engine load balancer.

Let’s run this command to create the frontend service:

kubectl create -f frontend-service.yaml

Now we verify this service is created:

kubectl get services

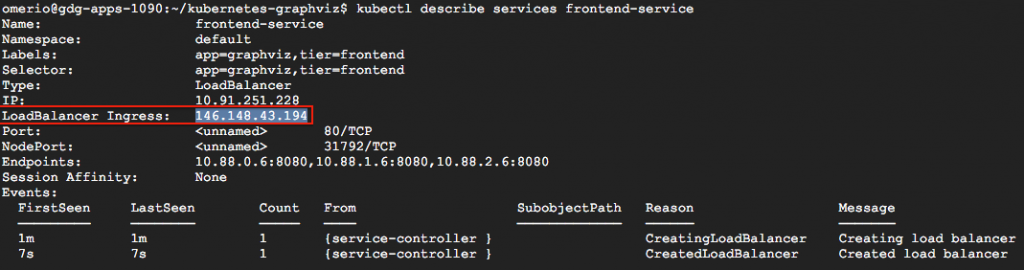

We can use the kubectl describe command to describe this load balancer service:

kubectl describe services frontend

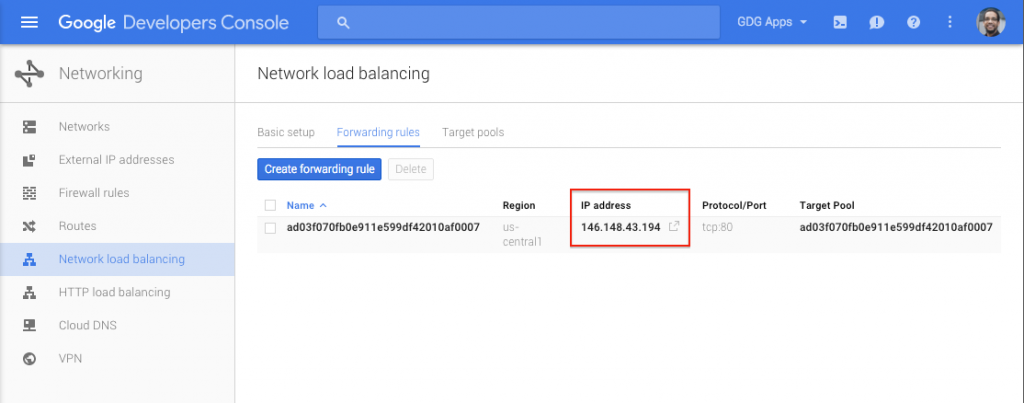

The “LoadBalancer Ingress” shows the external IP address of the load balancer. Also if we navigate to the Network section on the Google Cloud Console, we will see that a Network load balancing entry has been created.

Now the cluster is fully deployed as shown in our initial diagram.

Verifying Services Load Balancing

In this step we will verify that load balancing will actually take place, not just between the frontend Pods, but also the backend Pods. Luckily all the applications deployed on the frontend and backend Pods do output some logs when accessed. We can interactively tail these logs to ensure there is some load balancing.

Here is the webpage we get when we hit the load balancer’s external IP address. The page is served by one of the frontend Pods.

When we click the Render Graph button, the frontend Pods send requests to backend-service using the URL http://backend-service/svg. The Kubernetes Service backend-service provides DNS resolution and load balances between the backend Pods. I’ve demonstrated the load balancing in this video: the three consoles at the top are tailing the logs of the frontend Pods and the two at the bottom are tailing the logs of the backend Pods.

Clean Up

First, we delete the frontend-service Service, which also deletes our external load balancer:

kubectl delete services frontend-service

We then delete the cluster:

gcloud container clusters delete graphviz-app

Conclusion

In this post we described the deployment of a Kubernetes cluster on Google Container Engine for a multi-tiered web application. Now you are ready to migrate your own applications to containers and deploy them on Google Container Engine. Alternatively, you can continue with the tutorials in the Google Container Engine documentation to deploy a variety of other applications. This awesome codelab on developers.google.com is also worth a try. If you have any comments or queries, feel free to leave a comment or tweet me.